Algorithms that use the brain’s communication signal can now work on analog neuromorphic chips, which closely mimic our energy-efficient brains.

Today’s most successful artificial intelligence algorithms, artificial neural networks, are loosely based on the intricate webs of real neural networks in our brains. But unlike our highly efficient brains, these algorithms guzzle shocking amounts of energy when they run on computers: training the biggest models can consume nearly as much energy as five cars do over their lifetimes.

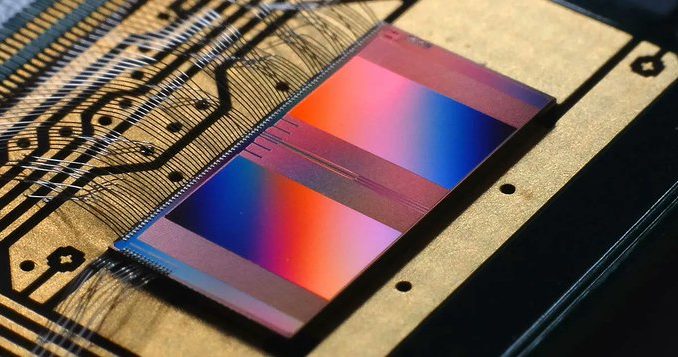

Enter neuromorphic computing, a closer match to the design principles and physics of our brains that could become the energy-saving future of AI. Instead of shuttling data over long distances between a central processing unit and memory chips, neuromorphic designs imitate the architecture of the jelly-like mass in our heads, with computing units (neurons) placed next to memory (stored in the synapses that connect neurons). To make them even more brain-like, researchers combine neuromorphic chips with analog computing, which can process continuous signals, just like real neurons. The resulting chips differ vastly, in both architecture and computing mode, from today’s digital-only computers, which process binary signals of 0s and 1s.

With the brain as their guide, neuromorphic chips promise to one day slash the energy consumption of data-heavy computing tasks like AI. Unfortunately, AI algorithms haven’t played well with the analog versions of these chips because of a problem known as device mismatch: on the chip, tiny components within the analog neurons vary slightly in size from one to the next, a byproduct of the manufacturing process. Because individual chips aren’t sophisticated enough to run the latest training procedures, the algorithms must first be trained digitally on computers. But when the trained algorithms are then transferred to the chip, their performance breaks down once they encounter the mismatch on the analog hardware.
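To make the train-digitally-then-transfer problem concrete, here is a toy sketch in Python. It is not the researchers' method and does not model any real chip. It trains a tiny perceptron "digitally" with exact floating-point weights, then "deploys" it to simulated chips on which every parameter is scaled by its own fixed random factor, drawn once per chip. The multiplicative Gaussian mismatch model, the dataset, and all function names (`make_data`, `train_perceptron`, `deploy_on_chip`, `mean_chip_accuracy`) are hypothetical, chosen only for illustration.

```python
import random

def make_data(n, margin=0.3, seed=0):
    """Toy 2-D dataset: label +1 if x0 + x1 > 0, else -1.
    Points closer than `margin` to the boundary are dropped, so the
    two classes are linearly separable with a gap."""
    rng = random.Random(seed)
    data = []
    while len(data) < n:
        x0, x1 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        s = x0 + x1
        if abs(s) >= margin:
            data.append(((x0, x1), 1 if s > 0 else -1))
    return data

def predict(w, b, x):
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else -1

def accuracy(w, b, data):
    return sum(predict(w, b, x) == y for x, y in data) / len(data)

def train_perceptron(data, max_epochs=1000):
    """'Digital' training: the classic perceptron rule with exact weights.
    Stops after the first epoch with no mistakes (guaranteed to happen
    here because the toy data are separable with a margin)."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            if y * (w[0] * x[0] + w[1] * x[1] + b) <= 0:
                w = [w[0] + y * x[0], w[1] + y * x[1]]
                b += y
                mistakes += 1
        if mistakes == 0:
            break
    return w, b

def deploy_on_chip(w, b, sigma, rng):
    """Hypothetical mismatch model: each weight and the bias is multiplied
    by its own fixed factor 1 + N(0, sigma), standing in for component
    variation on one analog chip."""
    w_chip = [wi * (1 + rng.gauss(0, sigma)) for wi in w]
    b_chip = b * (1 + rng.gauss(0, sigma))
    return w_chip, b_chip

def mean_chip_accuracy(w, b, data, sigma, n_chips=100, seed=1):
    """Average accuracy of the same trained model copied onto many chips."""
    rng = random.Random(seed)
    accs = []
    for _ in range(n_chips):
        w_chip, b_chip = deploy_on_chip(w, b, sigma, rng)
        accs.append(accuracy(w_chip, b_chip, data))
    return sum(accs) / len(accs)

data = make_data(400)
w, b = train_perceptron(data)
digital_acc = accuracy(w, b, data)
for sigma in (0.0, 0.1, 0.3, 0.5):
    chip_acc = mean_chip_accuracy(w, b, data, sigma)
    print(f"mismatch sigma={sigma:.1f}: mean chip accuracy {chip_acc:.3f} "
          f"(digital {digital_acc:.3f})")
```

With `sigma=0.0` the simulated chips reproduce the digital model exactly; as `sigma` grows, each chip's weights drift away from the values that training found and the average accuracy tends to fall. Accuracy is measured on the training data only to keep the toy short; the point is the gap between the digital and on-chip numbers.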