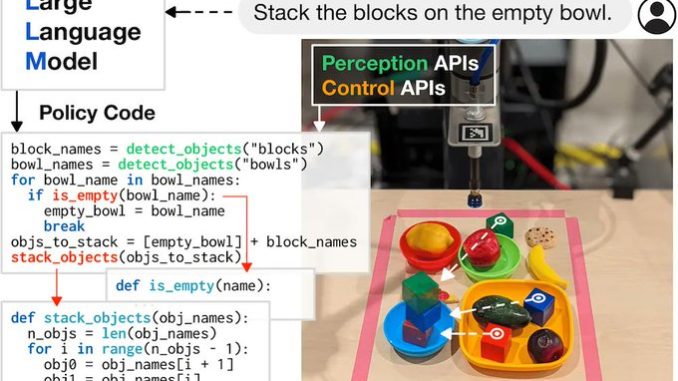

Researchers from Google’s Robotics team have open-sourced Code-as-Policies (CaP), a robot control method that uses a large language model (LLM) to generate robot-control code that achieves a user-specified goal. CaP uses a hierarchical prompting technique for code generation that outperforms previous methods on the HumanEval code-generation benchmark.

The technique and experiments were described in a paper published on arXiv. CaP differs from previous attempts to use LLMs to control robots: instead of generating a sequence of high-level steps or policies to be invoked by the robot, CaP directly generates Python code for those policies. The Google team developed a set of prompting techniques that improved code generation, including a new hierarchical prompting method. This technique achieved a new state-of-the-art score of 39.8% pass@1 on the HumanEval benchmark. According to the Google team:

> Code as policies is a step towards robots that can modify their behaviors and expand their capabilities accordingly. This can be enabling, but the flexibility also raises potential risks since synthesized programs (unless manually checked per runtime) may result in unintended behaviors with physical hardware. We can mitigate these risks with built-in safety checks that bound the control primitives that the system can access, but more work is needed to ensure new combinations of known primitives are equally safe. We welcome broad discussion on how to minimize these risks while maximizing the potential positive impacts towards more general-purpose robots.
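
To make the idea of hierarchical code generation more concrete, here is a minimal sketch. It assumes that "hierarchical" means the generated policy may call functions that do not exist yet, and that each missing function is then generated in turn. This is not the Google team's implementation: `fake_llm`, `CANNED_COMPLETIONS`, `undefined_calls`, `generate_hierarchically`, and the block-stacking task are all hypothetical names invented for illustration, and the "model" is just a dictionary lookup so the example runs without any external service.

```python
import ast
import builtins

# Hypothetical stand-in for an LLM: maps a prompt to canned Python source.
CANNED_COMPLETIONS = {
    "stack the blocks": (
        "def run_task(log):\n"
        "    for name in get_block_names():\n"
        "        pick_and_place(name, 'stack', log)\n"
    ),
    "get_block_names": (
        "def get_block_names():\n"
        "    return ['red block', 'blue block']\n"
    ),
    "pick_and_place": (
        "def pick_and_place(name, target, log):\n"
        "    log.append(f'move {name} to {target}')\n"
    ),
}


def fake_llm(prompt):
    """Return canned code for a prompt (a real system would query a model)."""
    return CANNED_COMPLETIONS[prompt]


def undefined_calls(source, known):
    """List plain function names that `source` calls but nothing defines yet."""
    tree = ast.parse(source)
    defined = {n.name for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)}
    called = {
        n.func.id
        for n in ast.walk(tree)
        if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)
    }
    return sorted(called - defined - set(known) - set(dir(builtins)))


def generate_hierarchically(prompt, namespace, depth=0, max_depth=3):
    """Generate code for `prompt`, first generating any functions it needs."""
    if depth > max_depth:
        raise RecursionError("generation nested too deeply")
    source = fake_llm(prompt)
    for name in undefined_calls(source, namespace):
        generate_hierarchically(name, namespace, depth + 1, max_depth)
    # Executing generated code is exactly where safety checks would belong.
    exec(source, namespace)
    return source


namespace = {}
generate_hierarchically("stack the blocks", namespace)
log = []
namespace["run_task"](log)
print(log)
```

In this sketch the top-level request yields a `run_task` function that depends on two helpers, and each helper is generated only because the parsed code calls it. The `exec` call is the point where a real system would need to restrict what generated code may access, in line with the bounded control primitives the team mentions.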