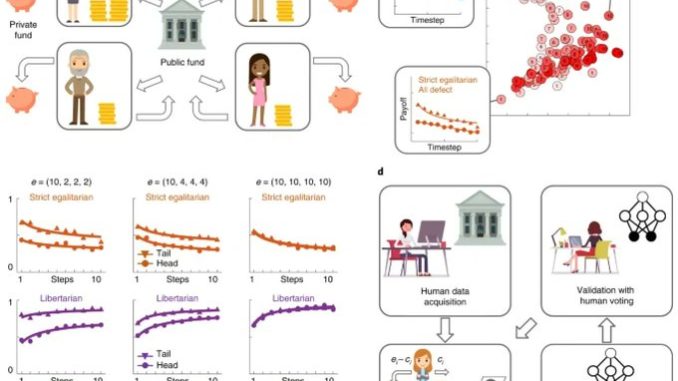

Building artificial intelligence (AI) that aligns with human values is an unsolved problem. Here, we developed a human-in-the-loop research pipeline called Democratic AI, in which reinforcement learning is used to design a social mechanism that humans prefer by majority. A large group of humans played an online investment game that involved deciding whether to keep a monetary endowment or to share it with others for collective benefit. Shared revenue was returned to players under two different redistribution mechanisms, one designed by the AI and the other by humans. The AI discovered a mechanism that redressed initial wealth imbalance, sanctioned free riders and won the majority vote. By optimising for human preferences, Democratic AI offers a proof of concept for value-aligned policy innovation.

Imagine that a group of people decide to pool funds to make an investment. The investment pays off, and a profit is made. How should the proceeds be distributed? One simple strategy is to split the return equally among investors. But that might be unfair, because some people contributed more than others. Alternatively, we could pay everyone back in proportion to the size of their initial investment. That sounds fair, but what if people had different levels of assets to begin with? If two people contribute the same amount, but one is giving a fraction of their available funds, and the other is giving them all, should they receive the same share of the proceeds?
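To make the arithmetic behind these options concrete, the following is a minimal sketch in Python of three ways to divide the proceeds: an equal split, a split proportional to the absolute contribution, and a split proportional to the fraction of available funds that each person contributed. The function names, the `multiplier` parameter (the assumed return on the pooled investment) and the example numbers are illustrative assumptions, not the mechanisms or parameters used in the study.

```python
from typing import List


def split_equal(contributions: List[float], multiplier: float) -> List[float]:
    """Divide the grown pool equally, ignoring who contributed what."""
    pool = sum(contributions) * multiplier  # hypothetical return on investment
    n = len(contributions)
    return [pool / n for _ in contributions]


def split_proportional(contributions: List[float], multiplier: float) -> List[float]:
    """Pay each person in proportion to the absolute amount they contributed."""
    total = sum(contributions)
    if total == 0:
        return [0.0] * len(contributions)
    pool = total * multiplier
    return [pool * c / total for c in contributions]


def split_relative(
    contributions: List[float], endowments: List[float], multiplier: float
) -> List[float]:
    """Pay each person in proportion to the fraction of their funds they gave.

    This is one possible reading of the question posed in the text,
    not a mechanism taken from the study.
    """
    fractions = [c / e if e > 0 else 0.0 for c, e in zip(contributions, endowments)]
    total_fraction = sum(fractions)
    if total_fraction == 0:
        return [0.0] * len(contributions)
    pool = sum(contributions) * multiplier
    return [pool * f / total_fraction for f in fractions]


if __name__ == "__main__":
    # Two investors give the same amount, but one is far wealthier (assumed values).
    endowments = [10.0, 2.0]
    contributions = [2.0, 2.0]
    multiplier = 2.0

    print("equal:       ", split_equal(contributions, multiplier))
    print("proportional:", split_proportional(contributions, multiplier))
    print("relative:    ", split_relative(contributions, endowments, multiplier))
```

With these assumed numbers, the equal and proportional rules give both investors the same payout, because their absolute contributions match. The relative rule gives the larger share to the investor who contributed all of their funds, which is the tension the final question in the paragraph points at.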

This question of how to redistribute resources in our economies and societies has long generated controversy among philosophers, economists and political scientists. Here, we use deep reinforcement learning (RL) as a testbed to explore ways to address this problem.