As computing and AI advancements spanning decades are enabling incredible opportunities for people and society, they’re also raising questions about responsible development and deployment. For example, the machine learning models powering AI systems may not perform the same for everyone or under every condition, potentially leading to harms related to safety, reliability, and fairness. Single metrics often used to represent model capability, such as overall accuracy, do little to demonstrate under which circumstances or for whom failure is more likely; meanwhile, common approaches to addressing failures, like adding more data and compute or increasing model size, don’t get to the root of the problem. Plus, these blanket trial-and-error approaches can be resource intensive and financially costly.
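To make the point about single metrics concrete, here is a minimal sketch in plain Python. It is not taken from the Responsible AI Toolbox; the function name `accuracy_by_cohort`, the cohort labels, and the toy data are all hypothetical and chosen only for illustration. It shows how an overall accuracy that looks acceptable can hide a cohort for which the model fails every time.

```python
from collections import defaultdict


def accuracy_by_cohort(y_true, y_pred, cohorts):
    """Return overall accuracy and a dict mapping each cohort to its accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, cohort in zip(y_true, y_pred, cohorts):
        total[cohort] += 1
        correct[cohort] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_cohort = {c: correct[c] / total[c] for c in total}
    return overall, per_cohort


# Hypothetical toy data: 8 examples from cohort "A", 2 from cohort "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
cohorts = ["A"] * 8 + ["B"] * 2

overall, per_cohort = accuracy_by_cohort(y_true, y_pred, cohorts)
print(overall)     # 0.8
print(per_cohort)  # {'A': 1.0, 'B': 0.0}
```

In this made-up case the model is 80% accurate overall, yet every prediction for cohort "B" is wrong. Breaking the metric down by cohort is one simple way to see for whom failure is more likely.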

Learn how this suite of tools can help assess machine learning models through a lens of responsible AI.

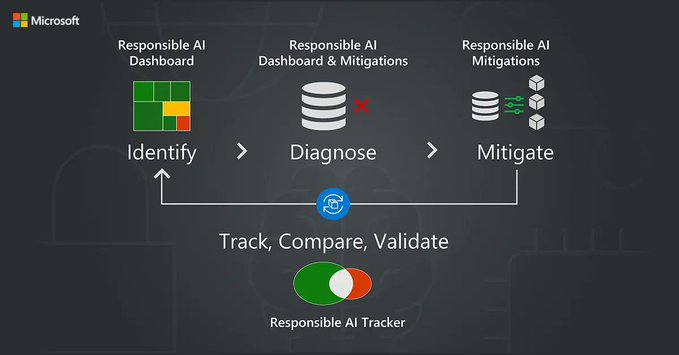

Through its Responsible AI Toolbox, a collection of tools and functionalities designed to help practitioners maximize the benefits of AI systems while mitigating harms, and other efforts for responsible AI, Microsoft offers an alternative: a principled approach to AI development centered around targeted model improvement. Targeted model improvement aims to identify solutions tailored to the causes of specific failures. It is a critical part of a model improvement life cycle that includes not only the identification, diagnosis, and mitigation of failures but also the tracking, comparison, and validation of mitigation options. The approach supports practitioners in better addressing failures without introducing new ones or eroding other aspects of model performance.

“With targeted model improvement, we’re trying to encourage a more systematic process for improving machine learning in research and practice,” says Besmira Nushi, a Microsoft Principal Researcher involved with the development of tools for supporting responsible AI. She is a member of the research team behind the toolbox’s newest additions: the Responsible AI Mitigations Library, which enables practitioners to more easily experiment with different techniques for addressing failures, and the Responsible AI Tracker, which uses visualizations to show the effectiveness of the different techniques for more informed decision-making.