In a world where seeing is no longer necessarily believing, experts warn that society must take a multi-pronged approach to combating the potential harms of computer-generated media.

As Bill Whitaker reports this week on 60 Minutes, artificial intelligence can manipulate faces and voices to make it look like someone said something they never said. The result is videos of things that never happened, called “deepfakes.” Often they look so real that people watching can’t tell they are fake. Just this month, Justin Bieber was tricked by a series of deepfake videos on the social media video platform TikTok that appeared to be of Tom Cruise.

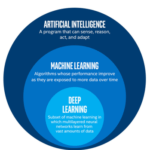

These fabricated videos, named for a combination of the computer science practice known as “deep learning” and “fake,” first arrived on the internet near the end of 2017. The sophistication of deepfakes has advanced rapidly in the ensuing four years, along with the availability of the tools needed to make them.
