In July and September, 15 of the biggest AI companies signed on to the White House’s voluntary commitments to manage the risks posed by AI. Among those commitments was a promise to be more transparent: to share information “across the industry and with governments, civil society, and academia,” and to publicly report their AI systems’ capabilities and limitations. Which all sounds great in theory, but what does it mean in practice? What exactly is transparency when it comes to these AI companies’ massive and powerful models?

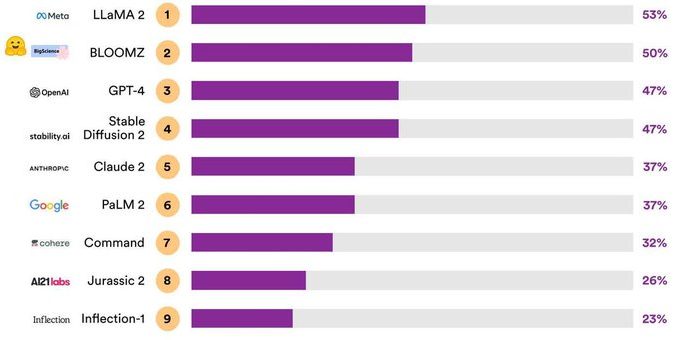

Thanks to a report spearheaded by Stanford’s Center for Research on Foundation Models (CRFM), we now have answers to those questions. The foundation models the researchers are interested in are general-purpose creations like OpenAI’s GPT-4 and Google’s PaLM 2, which are trained on a huge amount of data and can be adapted for many different applications. The Foundation Model Transparency Index graded 10 of the biggest such models on 100 different metrics of transparency.