On August 20, 2011, Marc Andreessen of a16z published a pivotal essay in the Wall Street Journal: “Why Software Is Eating the World.” Today, September 26, 2022, I’m publishing “Why AI Is Eating The Web3 Creator Economy.”

You see, it all stems from the advances in machine learning and deep learning that have exploded onto the scene with DALL-E, MidJourney, Stable Diffusion and, more recently, NVIDIA’s announcement of GET3D.

NVIDIA’s new AI-powered GET3D tool will mess with many recent startups that have built tools and apps for scanning objects to populate metaverse worlds.

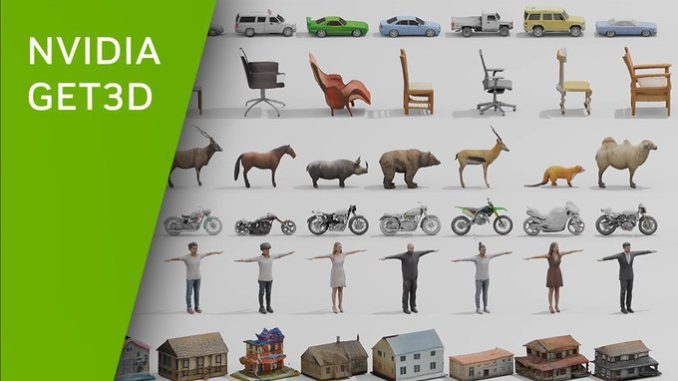

“Trained using only 2D images, NVIDIA GET3D generates 3D shapes with high-fidelity textures and complex geometric details. These 3D objects are created in the same format used by popular graphics software applications, allowing users to immediately import their shapes into 3D renderers and game engines for further editing.”

NVIDIA says it took just two days to feed around 1 million images into GET3D using A100 Tensor Core GPUs. That should give you a sense of the speed and scale at which it will disrupt other tools designed to scan everyday objects manually.

Its ability to instantly generate textured 3D shapes could be a game-changer for developers, helping them rapidly populate virtual worlds with varied and exciting objects.

With the help of another NVIDIA AI tool, StyleGAN-NADA, it’s also possible to apply various styles to an object with text-based prompts, so roughing up a building with decay or covering a 4×4 in mud would be easily done.

What’s more, these objects will no doubt end up as USD (Universal Scene Description) files, a format that NVIDIA and others are pushing to become one of the interoperable standards for 3D objects.

This means the promise of a democratized creator economy, where people can make money by uploading their own creations to sell on something like Sketchfab, is already becoming a thing of the past.

And if you take Quixel as an example, its Megascans library could be ingested and the business instantly made redundant.

Between MidJourney, DALL-E, and now Stable Diffusion, it won’t be long before we can enter a text prompt and have AI generate a metaverse from it.