Researchers in Shanghai and the United States have created a GAN-based portrait creation system that lets users build new faces with previously unattainable levels of control over specific features, including hair, eyes, spectacles, textures, and color.

The system’s versatility is shown by the fact that it has a Photoshop-style interface. A user can create realistic pictures using semantic segmentation elements, but this element can also be produced directly over existing photographs.

The work’s main contribution is in ‘disentangling’ properties of learned facial traits, such as position and texture, allowing SofGAN to generate faces at indirect angles to the camera viewpoint.

Face form and texture can now be changed as independent entities, thanks to the decoupling of textures from geometry. This permits the alteration of a source face’s race, a scandalous practice that now has a potentially helpful application in creating racially balanced machine learning datasets.

Similar segmentation>image systems like NVIDIA’s GauGAN and Intel’s game-based neural rendering system offer artificial aging and attribute-consistent style adjustment at a granular level that SofGAN does not.

Another advance for SofGAN’s methodology is that it can be trained directly on unpaired real-world photos rather than requiring paired segmentation/real images.

The ‘disentangling’ architecture of SofGAN, according to the researchers, was inspired by standard image rendering algorithms that break down the constituent facets of an image. The composite elements are routinely stripped down to the most minute components in visual effects procedures, with specialists dedicated to each component.

The researchers developed a semantic occupancy field (SOF), an extension of the classic occupancy field that identifies the component aspects of facial portraiture, to achieve this in a machine learning image synthesis framework. The SOF was trained without any ground truth supervision on calibrated multi-view semantic segmentation maps.

In addition, 2D segmentation maps are created by ray-tracing the SOF output before using a GAN generator to texture it. The synthetic semantic segmentation maps are additionally encoded in a low-dimensional space using a three-layer encoder to ensure that the output remains consistent when the viewpoint changes.

The hyper-net, a ray marcher, and a classifier are the three trainable sub-modules that make up the SOF. In some ways, the project’s Semantic Instance Wised (SIW) StyleGAN generator is set up similarly to StyleGAN2. Random scaling and cropping enhance the data, and training includes path regularisation every four steps. On four RTX 2080 Ti GPUs running CUDA 10.1, the complete training method took 22 days to reach 800,000 iterations.

The report does not specify the configuration of the 2080 cards, which can hold between 11 and 22 GB of VRAM apiece, implying that the total VRAM used to train SofGAN for the better part of a month is between 44 and 88 GB.

The researchers found that acceptable generalized, high-level findings appeared early in training, at 1500 iterations, three days in. The rest of the training followed a steady, predictable crawl towards small details like hair and eye facets.

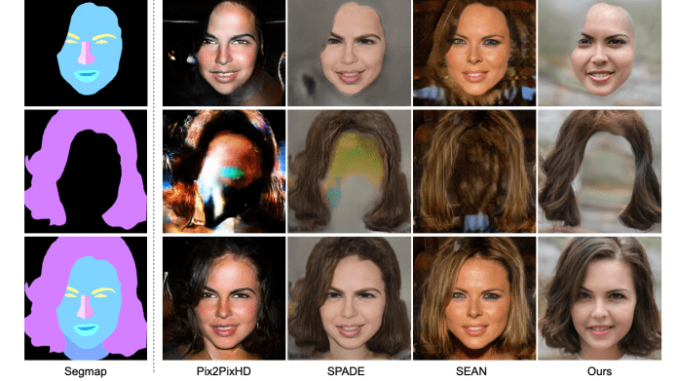

SofGAN, in comparison to alternative approaches such as Nvidia’s SPADE, Pix2PixHD, and SEAN, provides more realistic results from a single segmentation map.

Paper: https://arxiv.org/abs/2007.03780

Project: https://apchenstu.github.io/sofgan/

Code: https://github.com/apchenstu/sofgan