As artificial intelligence becomes part of everyday life, developers need to make sure that the data it learns from accurately reflects the real world.

People often think of artificial intelligence as just code – cold, lifeless and objective. In important ways, however, AI is more like a child. It learns from the data it is exposed to and optimises based on objectives that are established by its developers, who in this analogy would be its ‘parents’.

Like a young child, AI doesn’t know about the history or societal dynamics that have made the world the way it is. And just as children sometimes make strange or inappropriate remarks without knowing any better, AI naively learns patterns from the world without understanding the broader sociotechnical context that underlies its training data.

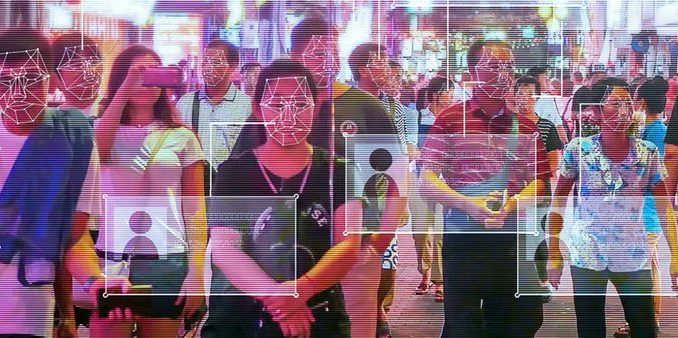

Unlike children, however, AI is increasingly being asked to make decisions in high-stakes contexts, including identifying criminal suspects, informing loan decisions and assisting with medical diagnoses. For AI ‘parents’ who want to make sure their ‘children’ do not learn to reflect societal biases and act in a discriminatory manner, it is important to consider diversity throughout the development process.