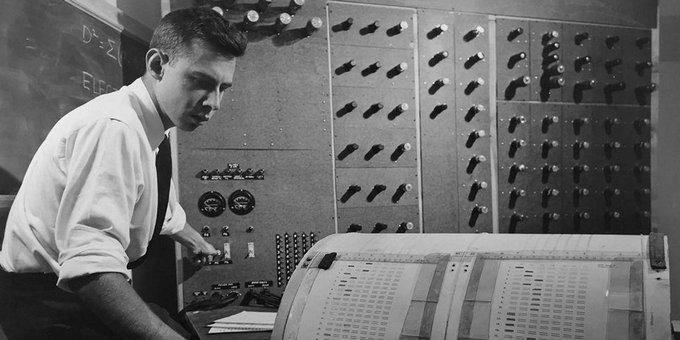

IN THE SUMMER OF 1956, a group of mathematicians and computer scientists took over the top floor of the building that housed the math department of Dartmouth College. For about eight weeks, they imagined the possibilities of a new field of research. John McCarthy, then a young professor at Dartmouth, had coined the term "artificial intelligence" when he wrote his proposal for the workshop, which he said would explore the hypothesis that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

The researchers at that legendary meeting sketched out, in broad strokes, AI as we know it today. The meeting gave rise to the first camp of investigators: the "symbolists," whose expert systems reached a zenith in the 1980s. The years after the meeting also saw the emergence of the "connectionists," who toiled for decades on the artificial neural networks that took off only recently. These two approaches were long seen as mutually exclusive, and competition for funding among researchers created animosity. Each side thought it was on the path to artificial general intelligence.
