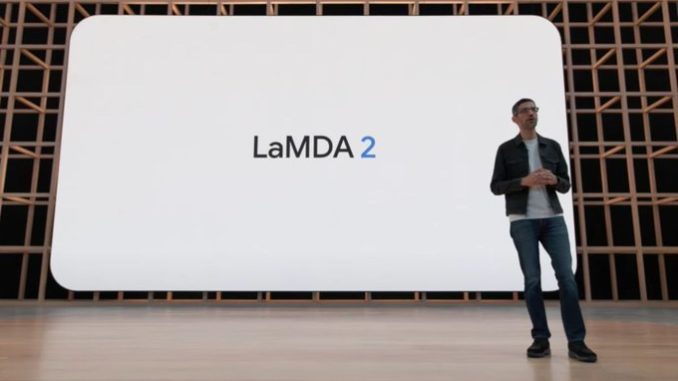

The past week has seen a frenzy of articles, interviews, and other types of media coverage about Blake Lemoine, a Google engineer who told The Washington Post that LaMDA, a large language model created for conversations with users, is “sentient.”

After reading a dozen different takes on the topic, I have to say that the media has become (a bit) disillusioned with the hype surrounding current AI technology. Many of the articles explained why deep neural networks are not “sentient” or “conscious.” This is an improvement over a few years ago, when news outlets were running sensational stories about AI systems inventing their own language, taking over every job, and accelerating toward artificial general intelligence.

But the fact that we’re discussing sentience and consciousness again underlines an important point: our AI systems, namely large language models, are becoming increasingly convincing while still suffering from fundamental flaws that scientists have pointed out on several occasions.