One of the longest-standing goals of AI has been to build a robot that can follow a diverse array of natural language instructions. Although research in this field is abundant, very few efforts have produced a robot that operates in the real world and can follow a wide variety of instructions. We are interested in the ability to follow interactive language, meaning that the robot responds skillfully and immediately to new natural language instructions given while it is performing a task.

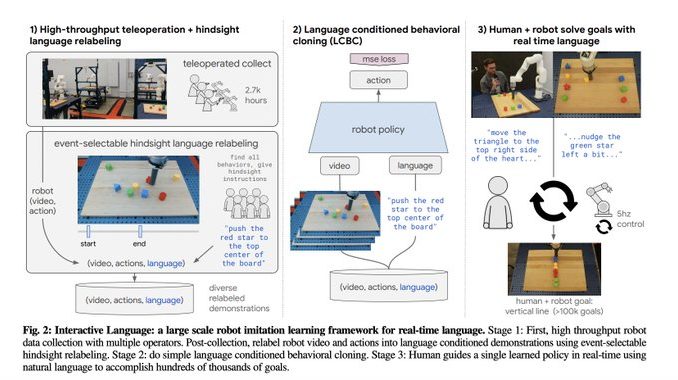

Recently, researchers at Google Research presented a method for building real-world, real-time interactive, natural-language-instructable robots that, by some metrics, operate at a scale an order of magnitude larger than prior work.

The robot operates in a setting the researchers designed to be manageable yet challenging: pixel-based perception, feedback-rich control, a variety of objects, and ambiguous natural language commands. They framed real-time language guidance as a large-scale imitation learning problem.
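To make the imitation learning framing concrete, here is a minimal toy sketch of language-conditioned behavioral cloning. It is not the researchers' model: the one-dimensional world, the two commands, the one-hot command encoding, and the linear policy are all assumptions chosen for illustration. The real system learns from camera pixels and free-form text.

```python
import random

# Hypothetical toy commands mapped to target positions (illustration only).
COMMANDS = {"move to the left edge": -1.0, "move to the right edge": 1.0}
NAMES = list(COMMANDS)

def features(x, command):
    """Concatenate the observation x with a one-hot encoding of the command."""
    return [x] + [1.0 if command == name else 0.0 for name in NAMES]

def expert_action(x, command):
    """Stand-in for a human demonstrator: move toward the commanded target."""
    return COMMANDS[command] - x

def policy(weights, x, command):
    """Linear policy: predicted action for an (observation, command) pair."""
    return sum(w * f for w, f in zip(weights, features(x, command)))

def train(steps=5000, lr=0.05, seed=0):
    """Behavioral cloning: regress the expert's action with SGD on squared error."""
    rng = random.Random(seed)
    weights = [0.0] * (1 + len(NAMES))
    for _ in range(steps):
        x = rng.uniform(-1.0, 1.0)
        command = rng.choice(NAMES)
        feats = features(x, command)
        error = policy(weights, x, command) - expert_action(x, command)
        weights = [w - lr * error * f for w, f in zip(weights, feats)]
    return weights

def rollout(weights, schedule, x=0.0, gain=0.5):
    """Run the policy, reading a possibly different command at every step."""
    for command in schedule:
        x += gain * policy(weights, x, command)
    return x

weights = train()
# The command changes mid-episode, and the policy follows the new one.
schedule = ["move to the right edge"] * 10 + ["move to the left edge"] * 10
final_x = rollout(weights, schedule)
```

Because the policy reads the current command at every control step, changing the instruction partway through the rollout redirects the behavior immediately. That is the property the article refers to as interactive language, shown here at toy scale.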

They demonstrated that the robot could perform intricate long-horizon rearrangements, such as “place the blocks into a happy face with green eyes” (see the figures), which require many minutes of precise, coordinated control and only occasional natural-language feedback from a human.