Efficient algorithms and methods in machine learning for AI accelerators — NVIDIA GPUs, Intel Habana Gaudi and AWS Trainium and Inferentia

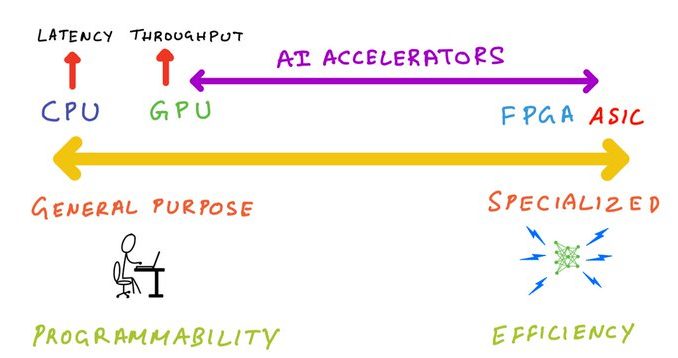

Chances are you’re reading this blog post on a modern computer that’s either on your lap or in the palm of your hand. This computer has a central processing unit (CPU) and other dedicated chips for specialized tasks such as graphics, audio processing, networking, and sensor fusion. These dedicated processors can perform their specialized tasks much faster and more efficiently than a general-purpose CPU.

We’ve been pairing CPUs with specialized processors since the early days of computing. The early 8-bit and 16-bit CPUs of the 1970s were slow at floating-point calculations because they relied on software to emulate floating-point instructions. As applications such as computer-aided design (CAD) and engineering simulations demanded fast floating-point operations, the CPU was paired with a math coprocessor, to which it could offload all floating-point calculations. The math coprocessor is an example of a specialized processor designed to do a specific task (floating-point arithmetic) much more quickly and efficiently than the CPU.