To quench the thirst for ever larger AI and machine learning models, Tesla has revealed a wealth of details at Hot Chips 34 on their fully custom supercomputing architecture called Dojo.

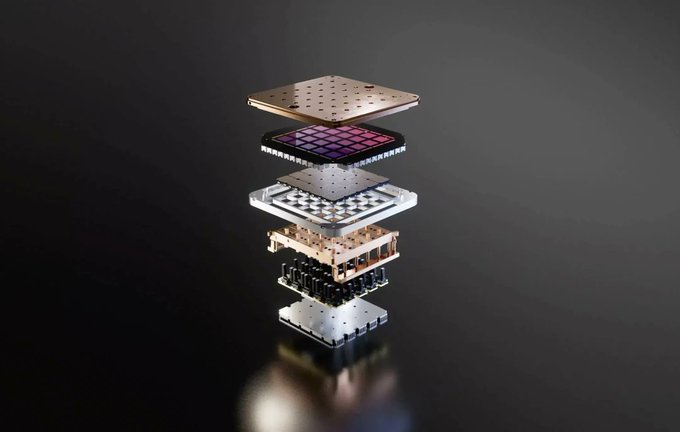

The system is essentially a massive composable supercomputer, although unlike what we see on the Top 500, it’s built from an entirely custom architecture that spans everything from the compute, networking, and input/output (I/O) silicon to the instruction set architecture (ISA), power delivery, packaging, and cooling. All of it was done with the express purpose of running tailored, specific machine learning training algorithms at scale.

"Real world data processing is only feasible through machine learning techniques, be it natural-language processing, driving in streets that are made for human vision to robotics interfacing with the everyday environment," Ganesh Venkataramanan, senior director of hardware engineering at Tesla, said during his keynote speech.

However, he argued that traditional methods for scaling distributed workloads have failed to accelerate at the rate necessary to keep up with machine learning’s demands. In effect, Moore’s Law is not cutting it, and neither are the systems available for AI/ML training at scale, namely some combination of CPUs and GPUs or, in rarer circumstances, specialty AI accelerators.
