- Neural network subspaces contain diverse solutions that can be ensembled, approaching the ensemble performance of independently trained networks without the training cost.

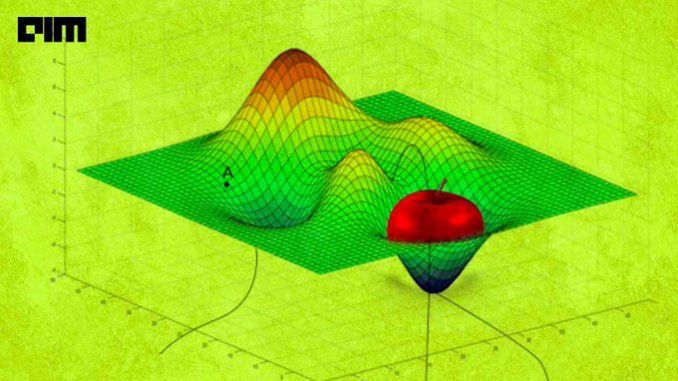

- Researchers have consistently demonstrated a positive correlation between the depth of a neural network and its ability to reach high-accuracy solutions. Quantifying that advantage remains elusive, however: it is still unclear how many layers are needed to make a given prediction accurately. As a result, there is always a risk of adopting overly complicated DNN architectures that exhaust computational resources and make training costly. The drive to remove this redundancy wherever possible has led researchers to probe the depths of neural network topology: subspaces.

- Interest in neural network subspaces has been around for a couple of decades, but their significance has become more obvious as deep neural networks have grown in size. Apple's machine learning team recently showcased its work on neural network subspaces at this year's ICML conference. In this work, the researchers showed that neural network subspaces contain diverse solutions that can be ensembled, approaching the ensemble performance of independently trained networks without the training cost.