Recently, Google introduced Federated Learning, a secure and robust approach to processing data and improving its services without having to collect users' training data in the cloud.

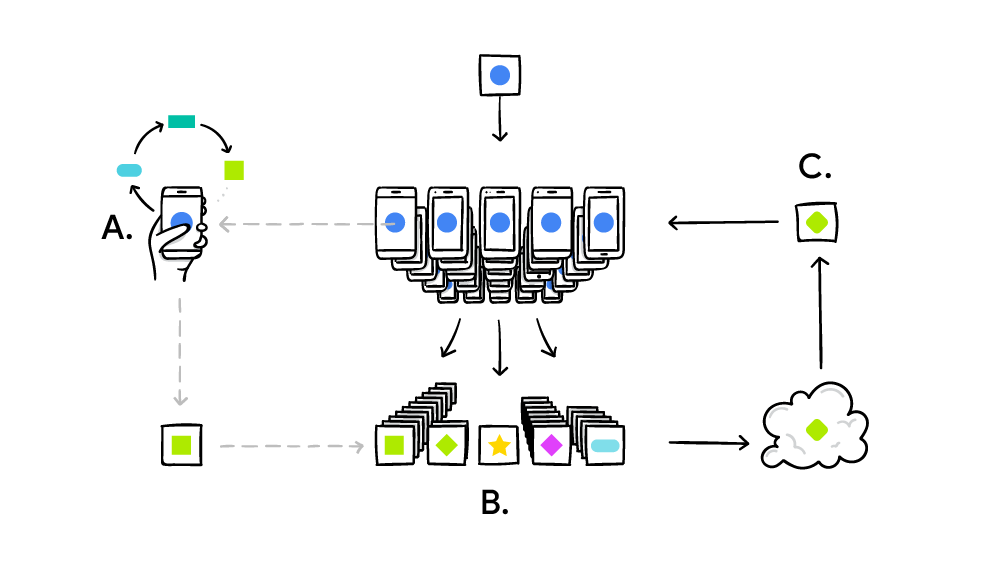

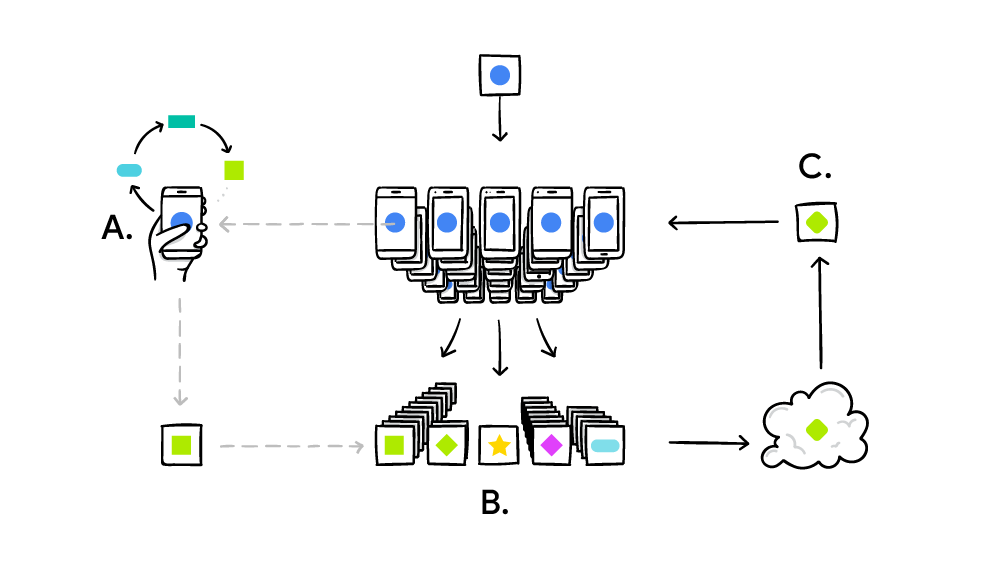

In Federated Learning, a model is trained from users' interactions with their mobile devices. Federated Learning enables mobile phones to collaboratively learn a shared prediction model while keeping all the training data on the device, decoupling the ability to do machine learning from the need to store the data in the cloud. This goes beyond local models that only make predictions on the device, such as those behind the Mobile Vision API or On-Device Smart Reply, by bringing model training to the device as well. A device downloads the current model, improves it by learning from the data on that phone, and then summarizes the changes as a small, focused update. Only this update is sent to the cloud, over an encrypted connection, where it is immediately averaged with other users' updates to improve the shared model.
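The on-device step can be sketched in plain Python. This is a minimal, hypothetical illustration, not Google's implementation: it assumes the shared model is a tiny linear regressor (`y = w*x + b`) trained with a few steps of gradient descent, and the names `local_update`, `lr` and `epochs` are made up for the example. The point is that only the parameter change (`delta`) and an example count leave the function, never the data.

```python
from typing import List, Tuple

Example = Tuple[float, float]  # (input x, target y)
Weights = Tuple[float, float]  # (w, b) of a hypothetical linear model


def local_update(global_weights: Weights, local_data: List[Example],
                 lr: float = 0.1, epochs: int = 5) -> Tuple[Weights, int]:
    """Improve a copy of the downloaded model on on-device data and return
    only the parameter change plus the number of examples used."""
    w, b = global_weights
    n = len(local_data)
    for _ in range(epochs):
        # Full-batch gradient of mean squared error over the device's data.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in local_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in local_data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    # The small, focused update: differences from the downloaded weights.
    delta = (w - global_weights[0], b - global_weights[1])
    return delta, n


if __name__ == "__main__":
    downloaded_model = (0.0, 0.0)
    phone_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # never leaves the device
    delta, num_examples = local_update(downloaded_model, phone_data)
    print("update sent to server:", delta, "from", num_examples, "examples")
```

In a real deployment the update would also be encrypted in transit, as described above; that step is omitted from this sketch.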

All the training data remains on the individual device, and no individual updates are stored in the cloud. Federated Learning distributes model training across many devices, making it possible to benefit from machine learning while minimizing the need to collect user data. The resulting models can also be used for on-device inference. Large technology companies are increasingly moving their machine learning applications onto users' devices to improve privacy and stability at the same time.

How can it be used?

Each participating device downloads the current model and computes an updated model locally, using edge computing and its own local data. These locally trained models are then sent back to the central server, where they are aggregated (for example, by averaging their weights), and a single consolidated, improved global model is sent back to the devices. In this way, machine learning algorithms can learn from a broad range of datasets even when those datasets are stored in different locations.
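The aggregation step can be illustrated with a minimal sketch of weighted averaging as used in the Federated Averaging (FedAvg) algorithm: each parameter of the new global model is the average of the clients' values, weighted by how many local examples each client trained on. The function name and the list-of-floats model representation are assumptions for illustration; real frameworks operate on full tensors.

```python
from typing import List, Sequence, Tuple

# Each client reports (locally trained weights, number of local examples).
ClientResult = Tuple[List[float], int]


def federated_average(client_results: Sequence[ClientResult]) -> List[float]:
    """Aggregate locally trained models into one global model by taking a
    per-parameter average weighted by each client's example count."""
    total = sum(n for _, n in client_results)
    if total == 0:
        raise ValueError("clients reported no training examples")
    global_weights = [0.0] * len(client_results[0][0])
    for weights, n in client_results:
        for i, value in enumerate(weights):
            global_weights[i] += value * n / total
    return global_weights


if __name__ == "__main__":
    # Three hypothetical devices holding different amounts of local data.
    results = [([1.0, 0.0], 10), ([3.0, 2.0], 30), ([2.0, 1.0], 60)]
    new_global_model = federated_average(results)
    print(new_global_model)  # this model is then sent back to the devices
```

Weighting by example count keeps a device with a handful of examples from pulling the global model as strongly as one with thousands; an unweighted mean is a simpler alternative.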

This approach also enables multiple organizations to collaborate on developing a model without directly sharing sensitive data with one another. Over several training iterations, the shared model is exposed to a significantly wider range of data than any single organization holds. Federated Learning therefore decentralizes machine learning by removing the need to pool data in a single location; instead, the same model is trained over multiple iterations at different locations. In healthcare, for example, institutions no longer need to exchange sensitive clinical data directly, which removes a major privacy risk.
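A toy example makes the "no pooling" idea concrete. Here the "model" is deliberately the simplest one possible, a mean, and each organization shares only its local mean and example count (the helper names `local_summary` and `combine` are hypothetical). The server's weighted combination matches the mean it would have computed from the pooled raw data. Real models are trained iteratively rather than in a single exchange, and even summaries like these can reveal some information, so this illustrates the principle rather than a privacy guarantee.

```python
from typing import List, Sequence, Tuple


def local_summary(values: Sequence[float]) -> Tuple[float, int]:
    """Runs inside one organization: only a mean and a count are shared."""
    return sum(values) / len(values), len(values)


def combine(summaries: List[Tuple[float, int]]) -> float:
    """Runs on the coordinating server: weighted average of local means."""
    total = sum(n for _, n in summaries)
    return sum(mean * n for mean, n in summaries) / total


if __name__ == "__main__":
    # Hypothetical measurements held separately by three hospitals.
    hospital_data = [[1.0, 2.0, 3.0], [10.0], [4.0, 5.0]]
    federated_mean = combine([local_summary(d) for d in hospital_data])
    pooled_mean = sum(sum(d) for d in hospital_data) / sum(len(d) for d in hospital_data)
    # Equal up to floating-point rounding, without pooling any raw values.
    print(federated_mean, pooled_mean)
```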

The Federated Learning Process

During training, each device trains the model locally and returns the trained model to the server. The model can be based on any of a range of popular machine learning algorithms, such as deep neural networks or support vector machines. Once trained, the model encodes the statistical patterns of the data in its numerical parameters and no longer needs the training data for inference, so when the device sends the trained model back to the server, it does not contain raw user data. Once the server receives the trained models from the devices, it updates the base model with the aggregated parameter values of those user-trained models. This federated learning cycle must be repeated several times before the model reaches a level of accuracy that satisfies the developer's requirements. Once the model is ready, it can be distributed to all users at once for on-device inference.
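The whole cycle (local training, aggregation, and repetition until the model is good enough) can be simulated in a few dozen lines. In this sketch the model is again a hypothetical linear regressor, "satisfactory accuracy" is stood in for by a mean-squared-error threshold (`target_loss`) on held-out data, and all function names, the synthetic data, and the hyperparameters are illustrative assumptions rather than values from the article.

```python
import random
from typing import List, Tuple

Weights = Tuple[float, float]
Dataset = List[Tuple[float, float]]


def make_client_data(rng: random.Random, n: int, noise: float) -> Dataset:
    """Hypothetical on-device data drawn from y = 3x + 1 plus Gaussian noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3.0 * x + 1.0 + rng.gauss(0.0, noise)) for x in xs]


def mse(weights: Weights, data: Dataset) -> float:
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def local_train(weights: Weights, data: Dataset, lr: float = 0.1,
                epochs: int = 5) -> Tuple[Weights, int]:
    """On-device step: a few epochs of full-batch gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b), n


def aggregate(results: List[Tuple[Weights, int]]) -> Weights:
    """Server step: example-weighted average of the returned models."""
    total = sum(n for _, n in results)
    w = sum(weights[0] * n for weights, n in results) / total
    b = sum(weights[1] * n for weights, n in results) / total
    return w, b


def run_federated_training(clients: List[Dataset], eval_data: Dataset,
                           target_loss: float = 0.01,
                           max_rounds: int = 200) -> Tuple[Weights, int, float]:
    """Repeat the federated cycle until the global model is good enough."""
    global_model: Weights = (0.0, 0.0)
    loss = mse(global_model, eval_data)
    for round_num in range(1, max_rounds + 1):
        results = [local_train(global_model, data) for data in clients]
        global_model = aggregate(results)
        loss = mse(global_model, eval_data)
        if loss <= target_loss:
            return global_model, round_num, loss
    return global_model, max_rounds, loss


if __name__ == "__main__":
    rng = random.Random(0)
    clients = [make_client_data(rng, 20, noise=0.05) for _ in range(5)]
    held_out = make_client_data(rng, 50, noise=0.05)
    model, rounds, loss = run_federated_training(clients, held_out)
    print(f"stopped after {rounds} rounds, loss={loss:.4f}, model={model}")
    # The finished model would now be shipped to every device for inference.
```

The `max_rounds` cap is a design choice for the sketch: without it, a target that can never be reached (for example, because the data is too noisy) would loop forever.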

Practical Paradigms of using Federated Learning

Several federated learning tasks, such as federated training or evaluation of existing machine learning models, can be implemented with TensorFlow Federated (TFF), a framework built on TensorFlow. TFF lets developers implement Federated Learning without detailed knowledge of how it works under the hood, and it also offers components for evaluating federated learning algorithms on a variety of existing models and datasets.

The interfaces offered consist of the following three key parts:

- Models: Classes and helper functions that let you wrap existing models for use with TFF. A model can be wrapped by calling a single wrapping function, namely `tff.learning.from_keras_model`, or by defining a subclass of the `tff.learning.Model` interface for full customizability.

- Federated Computation Builders: Helper functions that construct federated computations for training or evaluation using existing models.

- Datasets: Collections of data that you can download and access in Python to simulate federated learning scenarios. Although federated learning is designed for decentralized data that cannot simply be downloaded to a central location, data that can be downloaded and manipulated locally is convenient for initial experiments and research, which makes these datasets especially useful for developers. Two example tasks for testing with these datasets are image classification and text generation.
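To show how the three parts fit together, here is a framework-free sketch in plain Python. It is not TFF code: `WrappedModel`, `build_training_process`, `sgd_epoch`, and the dictionary of client datasets are hypothetical stand-ins for a wrapped model, a federated computation builder, and a simulation dataset. The builder returns an `initialize`/`next_round` pair, loosely modeled on the initialize/next structure of the iterative processes TFF's builders produce; consult the TFF documentation for the real API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Weights = Tuple[float, float]
Dataset = List[Tuple[float, float]]


@dataclass
class WrappedModel:
    """Part 1 (hypothetical): initial weights plus a local training routine."""
    initial_weights: Weights
    train_on_client: Callable[[Weights, Dataset], Weights]


def sgd_epoch(weights: Weights, data: Dataset, lr: float = 0.1) -> Weights:
    """One epoch of per-example SGD for a linear model y = w*x + b."""
    w, b = weights
    for x, y in data:
        err = w * x + b - y
        w, b = w - lr * 2 * err * x, b - lr * 2 * err
    return w, b


def build_training_process(model: WrappedModel):
    """Part 2 (hypothetical builder): returns initialize() and next_round()."""
    def initialize() -> Weights:
        return model.initial_weights

    def next_round(state: Weights, client_data: Dict[str, Dataset]) -> Weights:
        # Train on every client, then take an example-weighted average.
        updates = [(model.train_on_client(state, d), len(d))
                   for d in client_data.values()]
        total = sum(n for _, n in updates)
        w = sum(u[0] * n for u, n in updates) / total
        b = sum(u[1] * n for u, n in updates) / total
        return w, b

    return initialize, next_round


# Part 3 (hypothetical simulation dataset): client id -> local examples.
simulated_clients: Dict[str, Dataset] = {
    "client_a": [(0.0, 1.0), (1.0, 3.0)],
    "client_b": [(2.0, 5.0), (-1.0, -1.0), (0.5, 2.0)],
}

if __name__ == "__main__":
    initialize, next_round = build_training_process(WrappedModel((0.0, 0.0), sgd_epoch))
    state = initialize()
    for _ in range(50):
        state = next_round(state, simulated_clients)
    print(state)  # should approach w = 2, b = 1 for this data
```

Swapping `sgd_epoch` for a different training routine changes the model without touching the builder, mirroring the separation between models and computation builders described above.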

Source: https://analyticsindiamag.com/a-beginners-guide-to-federated-learning/