Deep neural networks have been responsible for many of the advances in machine learning over the last decade. Many of these networks, especially the best-performing ones, require massive amounts of compute and memory. These requirements not only raise infrastructure costs but also complicate deployment in resource-constrained environments such as mobile phones and smart devices.

Neural network pruning, which systematically removes parameters from an existing network, is a popular approach for reducing resource requirements at test time. The goal of pruning is to convert a large network into a smaller one with equivalent accuracy. In this article, we discuss the important points related to neural network pruning. The major points to be covered are listed below.

- Need for Inference Optimization

- What is Neural Network Pruning?

- Types of Pruning

- Advantages and Disadvantages

Let’s proceed with our discussion.

Need for Inference Optimization

An efficient model is one that optimizes memory usage and performance at inference time. Deep learning model inference is just as crucial as model training, and it is ultimately what determines the solution's performance metrics. Once a deep learning model has been properly trained for a given application, the next stage is to deploy it into a production-ready environment, which requires both the application and the model to be efficient and dependable.

Maintaining a healthy balance between model accuracy and inference time is critical. Inference time determines the running cost of the deployed solution. Memory-optimized, low-latency (ideally real-time) models are also crucial because the system where your solution will be deployed may have memory limits.

Developers are looking for novel and more effective ways to reduce the computing costs of neural networks, because applications such as image processing, finance, facial recognition, facial authentication, and voice assistants all require real-time processing. Pruning is one of the most widely used techniques.

What is Neural Network Pruning?

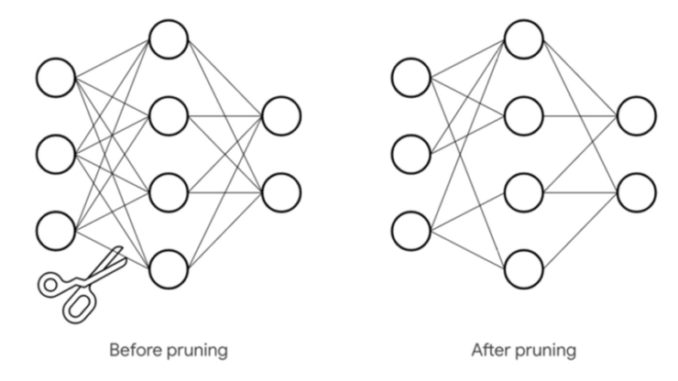

Pruning is the process of deleting parameters from an existing neural network, which might involve removing individual parameters or groups of parameters, such as neurons. The procedure aims to preserve the network's accuracy while improving its efficiency, cutting down the computing power needed to run the network.

Steps to be followed while pruning:

- Determine the significance of each neuron.

- Rank the neurons by importance (assuming there is a clearly defined measure of “importance”).

- Remove the neuron that is the least significant.

- Determine whether to prune further based on a termination condition (to be defined by the user).
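The steps above can be sketched as a small loop. This is only an illustration under stated assumptions: importance is taken to be the L1 norm of a neuron's weights, the termination condition is a target neuron count, and the names `neuron_importance` and `prune_neurons` are hypothetical helpers rather than part of any library.

```python
def neuron_importance(weights):
    """Score a neuron by the L1 norm of its weights (an assumed importance measure)."""
    return sum(abs(w) for w in weights)


def prune_neurons(layer, keep):
    """Repeatedly remove the least important neuron until `keep` neurons remain.

    `layer` maps a neuron name to its list of weights. The input is not modified.
    """
    remaining = dict(layer)
    while len(remaining) > keep:  # termination condition chosen by the user
        # Score every neuron and rank them from least to most important.
        ranked = sorted(remaining, key=lambda name: neuron_importance(remaining[name]))
        # Remove the least significant neuron.
        del remaining[ranked[0]]
    return remaining


# Toy layer with four neurons; two of them have near-zero weights.
layer = {
    "n0": [0.9, -1.2, 0.4],
    "n1": [0.01, 0.02, -0.03],
    "n2": [-0.5, 0.7, 0.1],
    "n3": [0.05, -0.04, 0.0],
}
pruned = prune_neurons(layer, keep=2)
print(sorted(pruned))
```

In practice the importance measure and the stopping rule are the two design choices that distinguish one pruning method from another; the loop itself stays the same.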

Every established method comes with guidelines, and following them helps ensure that the method produces the expected results. The research paper Lost in Pruning: The Effects of Pruning Neural Networks beyond Test Accuracy offers the following guidelines for pruning:

- If unanticipated shifts in the data distribution may occur during deployment, don’t prune.

- If you only have a partial understanding of the distribution shifts during training and pruning, prune moderately.

- If you can account for all shifts in the data distribution during training and pruning, prune to the maximum extent possible.

- When retraining, explicitly consider data augmentation to maximize the prune potential.

Types of Pruning

Pruning can take many different forms, and the right approach depends on the desired outcome. In some circumstances speed takes precedence over memory, whereas in others memory savings matter more than speed. Pruning approaches differ in how they handle sparsity structure, scoring, scheduling, and fine-tuning.

Structured and Unstructured Pruning

Unstructured pruning removes individual parameters. This results in a sparse neural network which, while smaller in terms of parameter count, may not be arranged in a way that yields speed improvements.

Zeroing out scattered individual parameters saves memory but may not improve computing performance, because with dense matrix routines we end up performing the same number of multiplications as before.

Because we set specific weights in the weight matrix to zero, this is also known as Weight Pruning.
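A minimal sketch of weight pruning, assuming absolute magnitude as the score. The function name `magnitude_prune` and the example matrix are illustrative and not taken from any library.

```python
def magnitude_prune(matrix, sparsity):
    """Zero out roughly the `sparsity` fraction of entries with the smallest magnitude.

    Entries tied with the threshold are also zeroed, so slightly more than the
    requested fraction may be pruned when magnitudes repeat.
    """
    magnitudes = sorted(abs(w) for row in matrix for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [row[:] for row in matrix]
    threshold = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in matrix]


weights = [
    [0.8, -0.05, 0.3],
    [0.02, -0.6, 0.1],
]
sparse = magnitude_prune(weights, sparsity=0.5)
print(sparse)
```

Note that the pruned matrix keeps its original 2 x 3 shape. Unless it is stored and multiplied in a sparse format, a dense matrix product still touches every entry, which is why unstructured pruning alone does not guarantee a speedup.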

To take advantage of hardware and software that are optimized for dense computation, structured pruning algorithms consider parameters in groups, deleting entire neurons, filters, or channels. We set entire columns in the weight matrix to zero, thus removing the matching output neuron. This is also known as Unit/Neuron Pruning.

For example, deleting some of the neurons in a feedforward layer, or some of the channels in a convolutional layer, directly reduces the amount of computation.
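The following sketch illustrates unit pruning on a single weight matrix. It assumes each column holds the incoming weights of one output neuron and scores columns by their L2 norm; both the scoring choice and the name `prune_columns` are assumptions made for illustration.

```python
import math


def prune_columns(matrix, n_remove):
    """Drop the `n_remove` columns (output neurons) with the smallest L2 norm.

    Returns the smaller dense matrix and the indices of the columns that were kept.
    """
    n_cols = len(matrix[0])
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_cols)]
    ranked = sorted(range(n_cols), key=lambda j: norms[j])
    removed = set(ranked[:n_remove])
    kept = [j for j in range(n_cols) if j not in removed]
    return [[row[j] for j in kept] for row in matrix], kept


weights = [
    [0.8, 0.01, -0.3, 0.02],
    [-0.5, 0.02, 0.9, -0.01],
    [0.4, -0.03, 0.2, 0.0],
]
smaller, kept = prune_columns(weights, n_remove=2)
print(kept, smaller)
```

Unlike the unstructured case, the result is a genuinely smaller dense matrix (3 x 2 instead of 3 x 4), so ordinary dense kernels do less work. In a full network, the matching rows of the next layer's weight matrix would have to be removed as well.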

Scoring

Pruning strategies may differ in the method used to score each parameter, and the score decides which parameters are kept and which are removed. Absolute magnitude is the standard scoring approach, but new scoring methods could be developed to improve the efficiency of pruning.

It is standard practice to score parameters based on their absolute values, trained importance coefficients, or contributions to network activations or gradients. Some pruning algorithms compare scores locally, trimming the parameters with the lowest scores within each layer or other structural subcomponent of the network.

Others compare scores globally, ranking them against one another regardless of where the parameter is located in the network.
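The difference between local and global comparison can be sketched on two toy layers. The sketch assumes magnitude scoring and flat weight lists; `prune_local` and `prune_global` are hypothetical helper names.

```python
def prune_local(layers, fraction):
    """Zero the lowest-magnitude `fraction` of weights inside each layer separately."""
    pruned = {}
    for name, weights in layers.items():
        n_prune = int(len(weights) * fraction)
        order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
        lowest = set(order[:n_prune])
        pruned[name] = [0.0 if i in lowest else w for i, w in enumerate(weights)]
    return pruned


def prune_global(layers, fraction):
    """Zero the lowest-magnitude `fraction` of all weights, ranked across layers."""
    entries = [
        (abs(w), name, i)
        for name, weights in layers.items()
        for i, w in enumerate(weights)
    ]
    n_prune = int(len(entries) * fraction)
    lowest = {(name, i) for _, name, i in sorted(entries)[:n_prune]}
    return {
        name: [0.0 if (name, i) in lowest else w for i, w in enumerate(weights)]
        for name, weights in layers.items()
    }


layers = {
    "fc1": [0.9, -0.8, 0.7, 0.02],   # mostly large weights
    "fc2": [0.05, -0.04, 0.03, 0.5],  # mostly small weights
}
local = prune_local(layers, fraction=0.5)
global_ = prune_global(layers, fraction=0.5)
print(local)
print(global_)
```

With local comparison each layer loses exactly half of its weights, so `fc1` gives up a fairly large weight (0.7). With global comparison the same budget is spent mostly on `fc2`, where the small weights are concentrated. Global ranking can therefore leave some layers much sparser than others.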

Iterative Pruning and Fine Tuning

Some methods prune the desired amount all at once, which is often referred to as One-shot Pruning. Others, known as Iterative Pruning, repeat the cycle of pruning the network to some extent and retraining it until the desired pruning rate is reached.

For approaches that require fine-tuning, it is most common to continue training the network from the weights that were trained before pruning. Other proposals include rewinding the network to an earlier state or reinitializing the network.
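To make the prune-then-retrain cycle concrete, here is a toy sketch on a four-weight linear model trained with plain gradient descent. Everything in it is an assumption chosen for illustration: the synthetic data, the magnitude score, pruning one weight per round, and the helper names `train` and `prune_smallest`. Fine-tuning continues from the surviving weights, the most common choice described above.

```python
import random


def train(weights, mask, data, steps=200, lr=0.5):
    """Gradient descent on mean squared error; masked (pruned) weights stay at zero."""
    w = [wi * m for wi, m in zip(weights, mask)]
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w = [(wi - lr * g) * m for wi, g, m in zip(w, grad, mask)]
    return w


def prune_smallest(weights, mask, n_prune):
    """Mask out the `n_prune` surviving weights with the smallest magnitude."""
    alive = [j for j, m in enumerate(mask) if m]
    drop = set(sorted(alive, key=lambda j: abs(weights[j]))[:n_prune])
    return [0 if j in drop else m for j, m in enumerate(mask)]


# Synthetic regression data: two of the four true weights are irrelevant.
rng = random.Random(0)
true_w = [2.0, 0.0, -3.0, 0.0]
data = []
for _ in range(50):
    x = [rng.uniform(-1.0, 1.0) for _ in true_w]
    data.append((x, sum(t * xi for t, xi in zip(true_w, x))))

mask = [1, 1, 1, 1]
w = train([0.1] * 4, mask, data)   # initial training of the dense model
for _ in range(2):                 # iterative pruning: one weight per round
    mask = prune_smallest(w, mask, n_prune=1)
    w = train(w, mask, data)       # fine-tune from the surviving weights
print(mask, [round(wi, 3) for wi in w])
```

The one-shot variant of the same sketch would call `prune_smallest(w, mask, n_prune=2)` once and fine-tune a single time. On a problem this small both reach the same mask; on real networks the iterative variant costs more training but is generally reported to preserve accuracy better at high sparsity.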

Scheduling

The schedule defines how much of the network is pruned after each epoch, together with the number of epochs used to train the model every time it is pruned. Pruning procedures differ in how much of the network they prune at each step.

Some approaches remove all the targeted weights in a single pass. Others iteratively prune a constant fraction of the network, while yet others use a more complicated function that changes the pace of pruning over time.
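Two such schedules can be written down as short functions that return the target sparsity after each pruning step. The first prunes a constant fraction of the remaining weights at every step. The second is a cubic ramp, shown here as one example of a "more complicated function"; it is an assumption for illustration, not a schedule prescribed by this article. Both function names are hypothetical.

```python
def constant_fraction_schedule(rate, steps):
    """Sparsity after each step when a fixed `rate` of the remaining weights is pruned."""
    return [1.0 - (1.0 - rate) ** (k + 1) for k in range(steps)]


def cubic_schedule(final_sparsity, steps, initial_sparsity=0.0):
    """Sparsity that rises quickly at first and flattens out at `final_sparsity`."""
    return [
        final_sparsity
        + (initial_sparsity - final_sparsity) * (1.0 - (k + 1) / steps) ** 3
        for k in range(steps)
    ]


constant = constant_fraction_schedule(rate=0.2, steps=3)
cubic = cubic_schedule(final_sparsity=0.8, steps=4)
print([round(s, 4) for s in constant])
print([round(s, 4) for s in cubic])
```

Pruning 20% of the remaining weights three times leaves 0.8 x 0.8 x 0.8 = 51.2% of the weights, so the sparsity after three steps is 48.8%. The cubic ramp prunes aggressively early, when the network has the most redundancy, and slows down as it approaches the final sparsity.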

Advantages

- Reduces inference and training time, depending on the compression method and, of course, the hardware

- As neurons, connections between layers, and weights are removed, storage requirements go down

- Reduces heat dissipation in deployed hardware such as mobile phones

- Saves power

Disadvantages

- Fewer pre-trained models and versions are available

- Selecting a compression method is difficult because it requires knowing the architecture of the target hardware

- The effects of pruning beyond the original accuracy are not well quantified

Conclusion

Neural network pruning is quite an old technique, but recent advances and an active research community have raised its popularity. It is a very powerful technique when you are aiming to reduce inference time and have limited hardware resources, such as constrained memory. In this article, we have looked at why inference time needs to be reduced, covered the main types of pruning, and finally reviewed the advantages and disadvantages of pruning.