An AI system built by software engineers at MIT’s Lincoln Laboratory automatically detects and analyses social media accounts that spread disinformation across a network — with startling results.

The Reconnaissance of Influence Operations (RIO) program has created a system that automatically detects disinformation campaigns, as well as those individuals who are spreading false narratives on social media networks.

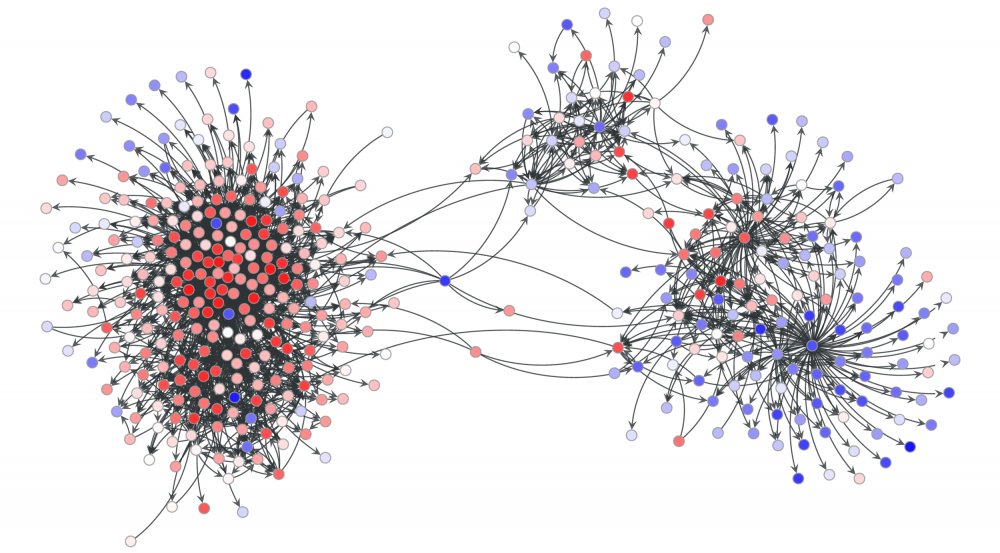

Researchers demonstrated RIO’s capability on real-world hostile influence operation campaigns on Twitter, using data collected during the 2017 French presidential election. They found their system could detect influence operation accounts with 96 per cent precision, 79 per cent recall and 96 per cent area under the precision-recall curve.
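For readers unfamiliar with these metrics: precision is the share of flagged accounts that really are influence operation accounts, and recall is the share of real influence operation accounts that get flagged. The sketch below computes both on a made-up set of labels. The function name and the toy data are illustrative assumptions, not RIO's code or data.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels.

    A label of 1 means "influence operation account", 0 means "ordinary account".
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical toy labels (not RIO data): 4 real IO accounts, 4 ordinary ones.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)  # prints "0.75 0.75"
```

The area under the precision-recall curve summarises how these two numbers trade off as the classifier's decision threshold is varied.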

RIO also mapped out salient network communities and discovered high-impact accounts that escape the lens of traditional impact statistics based on activity counts and network centrality.

In addition to the suspicious French election accounts, their dataset included known influence operations accounts disclosed by Twitter over a broad range of disinformation campaigns between May 2007 and February 2020. In total, it covered more than 50,000 accounts across 17 countries, and different account types including both trolls and bots.

Dr Steven Thomas Smith is a senior staff member in the Artificial Intelligence Software Architectures and Algorithms Group at the Massachusetts Institute of Technology (MIT) Lincoln Laboratory. He told create that the project was built using open source machine learning (ML), publicly available application programming interfaces (APIs), the lab’s own algorithms for ML features, as well as network causal inference to compute influence.

“Causal inference is a statistical method to infer outcomes in scenarios, like epidemiology and influence operations, where only one action can be taken,” he explained. “For example, this might be whether to take or not take medication, post or not post information — instances where the counterfactual action cannot be observed.”

The team relied on external sources to identify disinformation, and on its own algorithm to infer influence.

“We rely upon independent authorities for our disinformation sources, specifically, the US House Intelligence Committee, data provided by Twitter’s Elections Integrity project, and independent reporting,” he said.

“Influence is inferred using a network causal inference algorithm that measures the relative amount of content spread by a specific account over a network, compared to the amount that would have spread had the account not participated.”
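The comparison Smith describes can be illustrated with a deliberately simplified toy. This is not RIO's network causal inference algorithm: in real data the counterfactual cannot be observed and has to be estimated statistically. The sketch assumes a small, fully known resharing network in which content always spreads along every link, and all account names and function names are hypothetical.

```python
from collections import deque


def reach(resharers, seeds, removed=None):
    """Count accounts reached by content starting at `seeds`.

    `resharers` maps an account to the accounts that reshare its posts.
    If `removed` is given, that account is treated as not participating.
    """
    seen = {s for s in seeds if s != removed}
    queue = deque(seen)
    while queue:
        account = queue.popleft()
        for nxt in resharers.get(account, []):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen)


def influence(resharers, seeds, account):
    """Spread with the account minus spread had it not participated."""
    return reach(resharers, seeds) - reach(resharers, seeds, removed=account)


# Hypothetical toy network: key -> accounts that reshare the key's posts.
resharers = {
    "origin": ["hub", "a"],
    "hub": ["b", "c", "d"],
    "a": ["b"],
}

hub_influence = influence(resharers, ["origin"], "hub")
a_influence = influence(resharers, ["origin"], "a")
print(hub_influence, a_influence)
```

In this toy, removing "hub" cuts off accounts that no one else reaches, while removing "a" barely changes the spread, which is the kind of difference an activity count alone would not reveal.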

As for further applications for RIO, Smith said there are a number of directions for the researchers to consider.

“We are studying enhanced machine learning models that incorporate multimedia features, improved narrative models, and recently introduced ‘transformer’ models for natural language processing,” he said.

A transformer is a deep learning neural network model that has proven especially effective for common natural language processing (NLP) tasks. It uses an attention mechanism that weighs the influence of different parts of the input data. To date, it has been used primarily in NLP, and is expected to become more widely used to understand video.
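As a rough illustration of what "weighing the influence of different parts of the input" means, the sketch below implements scaled dot-product attention for a single query, the basic building block of transformer models. It is a minimal standard-library version on made-up vectors, not the models the RIO team is studying.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Each input position has a key and a value. The query is compared with
    every key, and the output is the weighted average of the values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Hypothetical toy input with three positions and two-dimensional vectors.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights, output)
```

Positions whose keys align with the query receive larger weights, so their values contribute more to the output.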

Smith added that whether the system can forecast how different countermeasures might halt the spread of a particular disinformation campaign is yet to be demonstrated.

“Causal inference could also be applied to forecast the outcome of specific actions or countermeasures, but we have not demonstrated this and it is an open research question,” he said.

Digital hydra

University of Melbourne School of Computing and Information Systems Lecturer David Eccles said that the RIO program is a significant step forward in automated defences using machine learning.

“To date, much of the research has been on identifying fake news in social media posts, rather than the accounts that promote and share the fake news posts,” he told create.

One clear benefit of using AI to combat disinformation is that faster detection of user accounts should mean fewer social media users are exposed to fake news.

“However, we need to be mindful that fake news on social media is not one problem — it is many problems and has been labelled a digital hydra,” he added.

Unsurprisingly, the engineers at the MIT lab aren’t the only ones looking to solve the problem of disinformation.

“In general, there are two approaches to the problem: faster automated detection and user empowerment,” Eccles said.

“My work overlaps with MIT’s RIO work in that it focuses on identifying user accounts, rather than the posts they create and share. Where my research differs is that it is focused on user empowerment to develop skills prior to exposure.”

He points to the work of the University of Oxford Internet Institute’s Programme on Democracy and Technology, which focuses on the direct and real threat to democratic processes, such as elections, as well as democratic institutions and social media’s role as a tool of information warfare.

“Disinformation on social media is an established threat to democratic institutions and processes and governments themselves are now being directly impacted by the threat,” he said.

An information war on many fronts

Of course, for all of the good work being done by engineers in using AI to combat fake news, computer models can also be used to supercharge disinformation.

“AI, in terms of machine learning and natural language processing, is already actively involved in disinformation and its prevention on social media,” Eccles said.

“Social media users are in the middle of an algorithmic arms race between those trying to detect and prevent, and those weaponising social media.”

He pointed to one particularly dangerous recent fake news campaign, but declined to mention the specific disinformation because repeating it helps reinforce its message.

“Bot accounts have been utilising NLP and ML to pick up trending topics on COVID-19, and recycle misinformation previously used on Zika and Ebola virus outbreaks,” he said.

Eccles said governments will have to legislate to make social media platforms more accountable for acting against disinformation, whether it comes from human or automated “bot” accounts.

“Detecting user accounts is a significant step in the right direction in reducing the total volume of disinformation on social media. Social media is a business and currently they have no motivation to change the profitable model,” he said.

“The only defences we have are user empowerment, and faster automated detection of posts and offending social media accounts.”

Source : https://createdigital.org.au/artificial-intelligence-battle-against-fake-news/
