The deep learning world of artificial intelligence is obsessed with size.

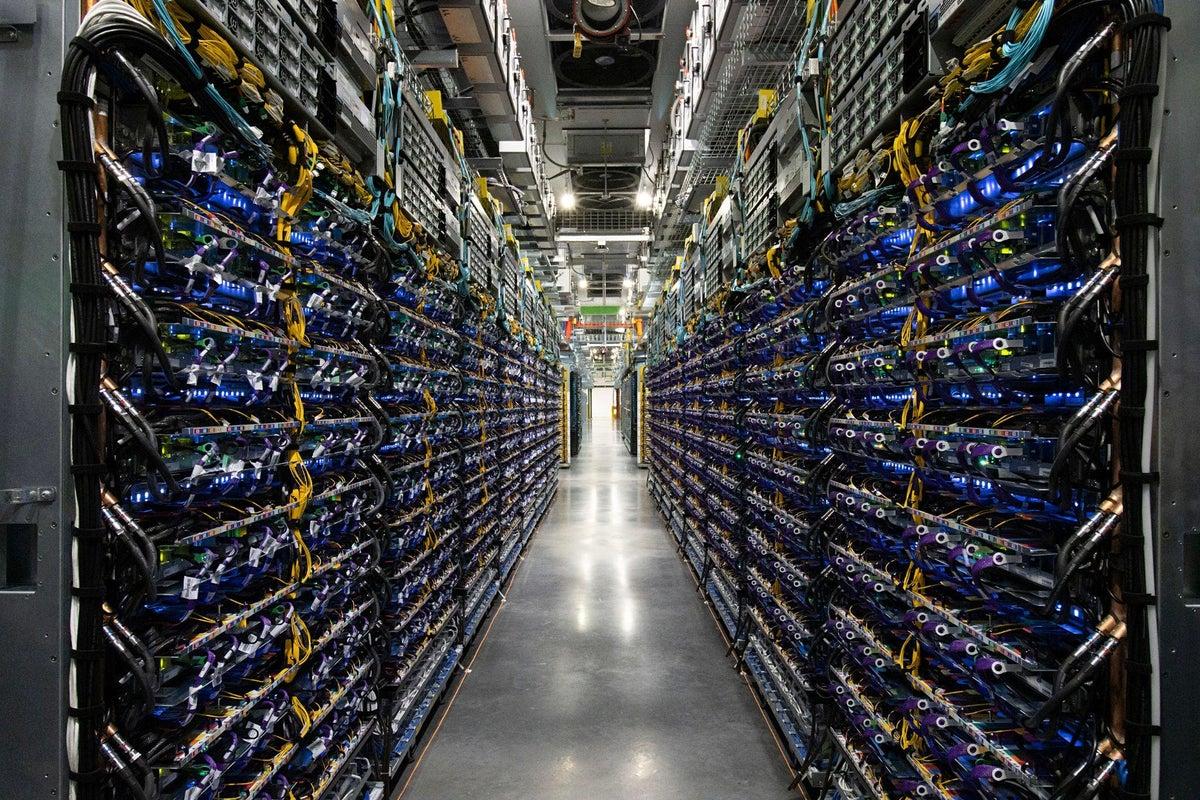

Deep learning programs, such as OpenAI’s GPT-3, continue to use more and more GPU chips from Nvidia and AMD, or novel kinds of accelerator chips, to build ever-larger software programs. Researchers contend that the accuracy of the programs increases with size.

That obsession with size was on full display Wednesday in the latest industry benchmark results reported by MLCommons, which sets the standard for measuring how quickly computer chips can crunch deep learning code.

Google decided not to submit to any standard benchmark tests of deep learning, which consist of programs that are well-established in the field but relatively outdated. Instead, Google’s engineers showed off a version of Google’s BERT natural language program, which no other vendor used.

MLPerf, the benchmark suite used to measure performance in the competition, reports results for two segments: the standard “Closed” division, where most vendors compete on well-established networks such as ResNet-50; and the “Open” division, which lets vendors try out non-standard approaches.
