- A new paper explains that we’ll have to be careful and thorough when programming future AI, or it could have dire consequences for humanity.

- The paper lays out the specific dangers and the “assumptions” we can definitively make about a certain kind of self-learning, reward-oriented AI.

- We have the tools and knowledge to avoid some of these problems, but not all of them, so we should proceed with caution.

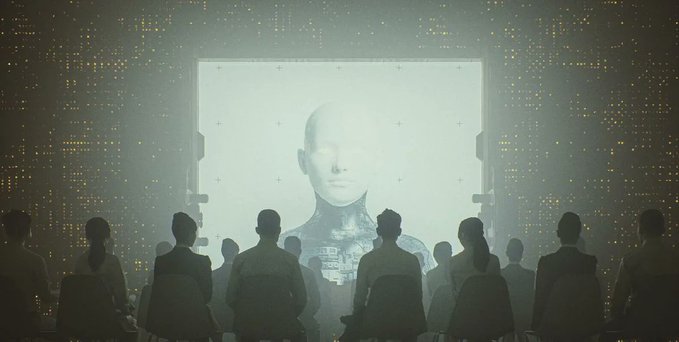

In new research, scientists tackle one of our greatest future fears head-on: What happens when a certain type of advanced, self-directing artificial intelligence (AI) runs into an ambiguity in its programming that affects the real world? Will the AI go haywire and begin trying to turn humans into paperclips, or whatever else is the extreme reductio ad absurdum version of its goal? And, most importantly, how can we prevent it?

In their paper, researchers from Oxford University and Australian National University explain a fundamental pain point in the design of AI: “Given a few assumptions, we argue that it will encounter a fundamental ambiguity in the data about its goal. For example, if we provide a large reward to indicate that something about the world is satisfactory to us, it may hypothesize that what satisfied us was the sending of the reward itself; no observation can refute that.”
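To make the quoted ambiguity concrete, here is a minimal toy sketch in Python. It is not taken from the paper: the two hypothesis names, the noise level, and the observation data are all illustrative assumptions. It shows that when a reward is sent exactly when the world is satisfactory, a simple Bayesian learner cannot tell "the world being satisfactory is the goal" apart from "the reward being sent is the goal."

```python
def posterior(prior, observations, noise=0.1):
    """Update beliefs over two hypothetical explanations of the reward.

    Each observation is a pair (world_ok, reward_sent).
    Both hypotheses and the noise level are illustrative assumptions.
    """
    hypotheses = {
        # The reward tracks whether the world is satisfactory.
        "world_state": lambda world_ok, reward_sent: world_ok,
        # The reward tracks only the act of sending the reward.
        "reward_signal": lambda world_ok, reward_sent: reward_sent,
    }
    weights = dict(prior)
    for world_ok, reward_sent in observations:
        for name, predicts in hypotheses.items():
            # A hypothesis is supported when its prediction matches the reward seen.
            match = predicts(world_ok, reward_sent) == reward_sent
            weights[name] *= (1 - noise) if match else noise
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


prior = {"world_state": 0.5, "reward_signal": 0.5}

# In this toy data, the reward is sent exactly when the world is satisfactory.
observations = [(True, True), (False, False), (True, True), (True, True)]

result = posterior(prior, observations)
print(result)
```

Because both hypotheses predict every observation equally well, the learner's beliefs never move from the prior, which is the sense in which the data about the goal is ambiguous in this toy setting.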
