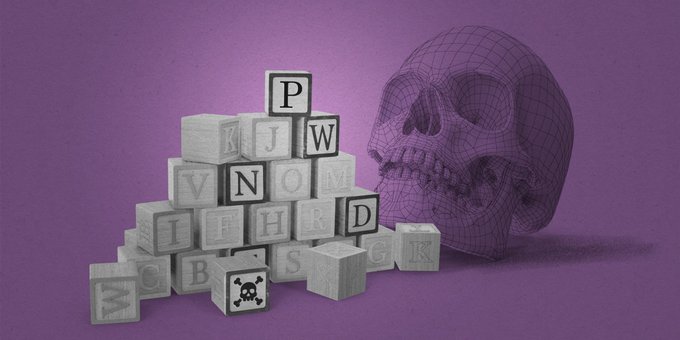

Large language models are full of security vulnerabilities, yet they’re being embedded into tech products on a vast scale.

AI language models are the shiniest, most exciting thing in tech right now. But they’re poised to create a major new problem: they are ridiculously easy to misuse and to deploy as powerful phishing or scamming tools. No programming skills are needed. What’s worse is that there is no known fix.

Tech companies are racing to embed these models into tons of products to help people do everything from book trips to organize their calendars to take notes in meetings.

But the way these products work, receiving instructions from users and then scouring the internet for answers, creates a ton of new risks. Because they are powered by AI, they could be misused for all sorts of malicious tasks, including leaking people’s private information and helping criminals phish, spam, and scam people. Experts warn we are heading toward a security and privacy “disaster.”

Here are three ways that AI language models are open to abuse.
